Sheref Morad is an investigative data journalist based in Egypt, specializing in human digital rights and algorithmic accountability, focusing on uncovering AI crimes, social media biases, disinformation, and their impact on public discourse in the Middle East.

Automated Siege: The Role of Algorithms in Shaping the Narrative on Palestinian Content

In 2021, the Sheikh Jarrah dispute inspired well-known social media initiatives like #SaveSheikhJarrah, drawing attention to the forcible relocation of Palestinian residents. In response, Israeli officials took several actions in the digital space to counter what they perceived to be a surge of anti-Israel propaganda. Israeli leaders responded swiftly and forcefully to this display of digital solidarity because they understood the important role these online movements played in shaping public opinion globally and affecting stability at home.

One of the most significant steps in the Israeli response was the attempt to get Facebook and Twitter to filter pro-Palestinian content. As the Arab Center Washington DC explains, Israeli authorities frequently ask tech companies and social media platforms such as Facebook, Google, and YouTube to remove pro-Palestinian content and accounts. In May 2021, Israeli authorities met with Facebook and TikTok executives and asked them to remove content that “incites to violence or spreads disinformation”; they also reiterated the importance of responding to the Israeli cyber bureau’s requests. At the same time, popular Facebook pages such as "Save Sheikh Jarrah" were taken down, and the Instagram accounts of high-profile activists such as Muna El-Kurd, who documented her experiences in Sheikh Jarrah, were temporarily suspended.

This suppression, however, did not end with Sheikh Jarrah. Organizations like The Arab Center for the Advancement of Social Media (7amleh) and Human Rights Watch have documented ongoing, systemic digital repression targeting Palestinian voices, particularly following the outbreak of the Gaza war on October 7, 2023. A Human Rights Watch report on Meta’s censorship of Palestinian content states that, of the 1,050 cases reviewed for the report, 1,049 involved Facebook and Instagram restricting pro-Palestinian content, compared to just one involving pro-Israeli content. These numbers point to an entrenched bias in content moderation policies. Similarly, according to a briefing by 7amleh, over 1,009 cases of censorship, hate speech, and incitement to violence have been documented across platforms since October 7, 2023. These cases predominantly involve Palestinian content, which is disproportionately flagged and removed under the pretence of violating community standards.

Palestinian media outlets also face significant challenges. Mr. Muammar Orabi, editor-in-chief of Watan Media Network in Ramallah, described in a virtual interview how their pages have been subjected to algorithmic repression since the events of Sheikh Jarrah, with suppression intensifying after October 7, 2023. Palestinian journalists, activists, and ordinary users consistently encounter the same barrier: their narratives are suppressed under the pretext of violating community standards, further marginalizing their voices in the global discourse. As reflected in the algorithmic moderation patterns we see on Facebook and Instagram, Palestinian voices and content continue to be suppressed online, which goes against the policies of the platforms and their neutrality in international conflicts.

How are algorithms and content moderation policies shaping stories?

Algorithms largely determine whose stories are widely shared and whose voices are ignored. Based on wide-ranging factors including perceived sensitivity, relevance, and interaction potential, these algorithms decide what people see on social networking sites like Facebook. This ranking favors content that is already popular and, as a result, actively shapes public narratives: high-engagement content (likes, shares, and comments) is given preference and promoted. Moreover, these algorithms hyper-personalize each user's feed by drawing on extensive data about user preferences, engagement trends, and content themes. Politically sensitive content, including viewpoints from Palestinians, frequently struggles to get noticed and is sometimes deprioritized or even removed. This gatekeeping function affects the digital presence of marginalized voices, limiting the reach of those advocating for Palestinian rights.

In addition to the weaponization of algorithms, the suppression of Palestinian content also occurs through biased content moderation policies of tech companies and social media sites. Facebook's moderation practices, for example, have drawn criticism for allegedly censoring pro-Palestinian content, as activists frequently report that posts related to their experiences and solidarity movements are flagged or removed under broad community standards, effectively muting crucial conversations and limiting organic spread. Palestinian journalists and activists often find their posts removed or accounts suspended for sharing stories of war crimes, displacements, or the humanitarian crisis in Gaza. This is especially troubling because their work provides critical insights into a conflict often misrepresented or underreported in mainstream media.

When faced with accusations of bias, Facebook has often attributed the suppression of Palestinian content to technical glitches – though the high volume and timing of these removals, especially during periods of heightened conflict, raise doubts and lead many to suspect a deeper, systematic bias against Palestinian voices. Within Facebook, some employees have also expressed concern over these practices, calling for a more balanced approach and noting discrepancies in how Palestinian and pro-Israel content are treated.

Ghada Sadek, an Egyptian physician, was suspended for 24 hours for allegedly "violating Facebook's standards" after sharing a video on Facebook of an Israeli drone attack on a young man. The post was removed within hours. Moroccan journalist Saida Mallah was similarly suspended on Instagram after sharing pictures from the "Tents Holocaust" attack in Rafah, which claimed the lives of forty-five Palestinians, including women and children. Beyond these single events, both Ghada and Saida noticed a concerning trend: posts showing or referring to violence against Palestinians saw a sharp drop in visibility, reaching far fewer people than previously.

For journalists and activists like Ghada and Saida, this suppression isn’t just an inconvenience; it is an erasure of their efforts to document reality and amplify marginalized voices. Whether they reveal human rights abuses or war atrocities, their posts offer critical viewpoints that challenge prevailing beliefs. However, as their visibility decreases, even with continued engagement from followers, so does the possibility for these stories to reach a larger global audience. This algorithmic gatekeeping does more than limit free expression: it distorts global discourse, depriving the world of a full and accurate understanding of the Palestinian experience.

Our methodological approach

Our analysis indicates that these personal accounts are emblematic of a broader trend. By tracking how posts were shared and engaged with during the Gaza War, we observed a systematic suppression of pro-Palestinian content. Using two key indicators, reach (the number of times a post is viewed) and engagement (interactions such as likes, shares, and comments), we found that content containing politically sensitive terms like “Palestine” and “Gaza” was disproportionately affected. This suppression was evident in reduced visibility, even for posts with strong audience engagement.

The data for this analysis was gathered by the Meltwater Data Team, utilizing their advanced tools to monitor social media activity on platforms such as Facebook. Metrics on reach, engagement, and keyword relevance were extracted from key posts and pages.

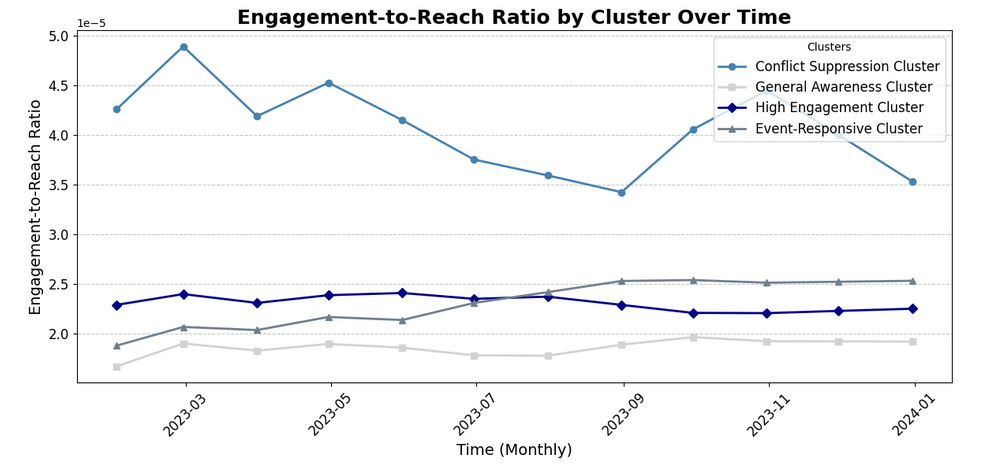

The collected data was then analyzed using Excel and Python. Excel facilitated data cleaning and visualization, while Python enabled advanced statistical testing and clustering. Libraries such as Pandas and SciPy were employed for data manipulation and hypothesis testing. To uncover patterns in content suppression, k-means clustering was applied, grouping posts based on their reach and engagement metrics.
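To make the clustering step concrete, here is a minimal sketch of k-means applied to reach and engagement. It is not the code used for this analysis: it relies only on Python's standard library rather than Pandas or SciPy, the post values are invented, and the choice to log-scale both metrics and to use two clusters are assumptions made for illustration.

```python
import math
import random


def kmeans(points, k, iterations=50, seed=0):
    """Plain Lloyd's algorithm on a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct posts
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster in place
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return labels, centroids


# Hypothetical posts as (reach, engagement); the numbers are invented.
posts = [
    (1200, 300), (1500, 350), (1100, 280),      # comparatively low reach
    (52000, 400), (61000, 450), (58000, 380),   # comparatively high reach
]

# Assumption: log-scale both metrics so reach does not dominate the distance.
features = [(math.log10(reach), math.log10(eng)) for reach, eng in posts]
labels, centroids = kmeans(features, k=2)

for post, label in zip(posts, labels):
    print(post, "-> cluster", label)
```

In this toy data the engagement counts are similar across all six posts, so the two clusters separate almost entirely on reach, which is the kind of low-reach grouping the analysis looks for.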

This structured approach ensured that our findings were based on reliable data and robust analytical techniques, providing a detailed picture of how algorithms influence the visibility of pro-Palestinian content. Suppression became particularly pronounced during key events, such as when the Gaza War broke out on October 7, 2023; pro-Palestinian posts that initially gained traction began to lose visibility precisely when these narratives were most crucial. Our analysis of weekly and bi-weekly patterns revealed that posts with keywords related to the conflict consistently clustered into low-reach groups, even when audience interaction remained strong. This highlighted how moderation algorithms deprioritize politically sensitive content, limiting its reach regardless of user interest.

Due to limited pre-October data, the analysis centred on content posted after the outbreak of the conflict, so the observed patterns reflect recent moderation behaviors rather than a before-and-after comparison. This methodological approach allowed us to uncover potential algorithmic bias as it occurred and illustrated how social media platforms may influence the digital presence of certain narratives through differential visibility and engagement.

Automated siege on Palestine

The digital landscape for Palestinian narratives is being controlled by algorithms that decide what content is shown and what is hidden. By examining reach (the number of views) and engagement (likes, shares, and comments), we observed a clear trend: posts containing sensitive keywords such as “Palestine” and “Gaza” consistently experienced lower reach and engagement than other content. This happened even when posts resonated strongly with audiences, highlighting how algorithms appear to deprioritize certain narratives, irrespective of their importance or popularity. Statistical testing confirmed significant visibility reductions for these posts, suggesting that keywords tied to the Palestinian narrative can serve as a trigger for algorithmic downranking.
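As an illustration of what such a test can look like, the sketch below compares the reach of keyword posts with the reach of other posts using a two-sided permutation test. This is a stand-in chosen because it needs only the standard library; the article does not specify which test was run, and the reach figures here are invented.

```python
import random


def permutation_test(group_a, group_b, n_permutations=5000, seed=0):
    """Two-sided permutation test on the difference in mean between two groups."""
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffle the group labels and recompute the difference in means.
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / (len(pooled) - n_a)
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical reach figures; the numbers are invented for illustration.
keyword_reach = [1200, 1500, 1100, 1800, 900, 1300]   # posts mentioning the keywords
other_reach = [5200, 6100, 5800, 4900, 7000, 5500]    # all other posts

observed, p_value = permutation_test(keyword_reach, other_reach)
print(f"difference in mean reach: {observed:.0f}, p = {p_value:.4f}")
```

A small p-value says only that a gap this large is unlikely if keyword and non-keyword posts were interchangeable; it does not by itself show what caused the gap.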

Our data revealed a pattern we call the Conflict Suppression Cluster. This collection of posts, dominated by keywords like "Palestine" and "Gaza," has some of the highest engagement-to-reach ratios, indicating strong audience interest. Yet these posts had persistently lower visibility than others, pointing to systematic constraints on their reach. Whether deliberate or the result of algorithmic bias, the outcome is the same: critical voices are marginalized.
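The engagement-to-reach ratio is simply total interactions divided by views. The sketch below computes it for a few invented posts and flags those that combine an above-median ratio with below-median reach. The median thresholds and field names are assumptions for illustration, not the criteria used to define the cluster.

```python
from statistics import median

# Hypothetical posts; ids and numbers are invented for illustration.
posts = [
    {"id": "a", "reach": 1200, "engagement": 300},
    {"id": "b", "reach": 52000, "engagement": 400},
    {"id": "c", "reach": 1500, "engagement": 360},
    {"id": "d", "reach": 61000, "engagement": 610},
]

# Engagement-to-reach ratio: interactions per view.
for post in posts:
    post["ratio"] = post["engagement"] / post["reach"]

median_ratio = median(post["ratio"] for post in posts)
median_reach = median(post["reach"] for post in posts)

# Assumption: "high interest, low visibility" means a ratio above the median
# together with reach below the median.
flagged = [
    post["id"]
    for post in posts
    if post["ratio"] > median_ratio and post["reach"] < median_reach
]
print(flagged)
```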

Another significant pattern appeared in the Event-Sensitive Cluster, where content visibility fluctuated sharply around key conflict-related events. Posts with terms like “Palestine” and “Gaza” would briefly surge in engagement during major moments of public interest but quickly lose reach. This dynamic suggests that algorithms may adjust visibility in response to heightened interaction, suppressing contentious narratives shortly after they gain traction. This behaviour raises questions about whether platforms are managing perceived risks by muting sensitive topics during critical periods.

Interestingly, posts in both clusters often lacked broader, neutral keywords such as "humanitarian" or "aid." Instead, they focused on words directly related to the conflict, which appeared to result in algorithmic suppression. This distinction suggests that platforms may apply stricter filtering to narratives about politically controversial issues while allowing less politically charged content to circulate more freely.

These discoveries are more than just technical observations; they reveal the inner workings of a system that selectively shapes public conversation. Through aggregating posts and analysing patterns, we have discovered how certain terms and themes associated with the Palestinian narrative serve as signals for content filtering, limiting their visibility and empowering only opposing views.

Balancing the scales: ensuring fair visibility in a digital age

Social media platforms' selective content suppression has a significant impact on public conversation, especially when it obscures marginalized or politically delicate perspectives. A report by 7amleh indicates that two-thirds of Palestinian youth fear expressing political opinions online, a chilling statistic that highlights the psychological and societal toll of these policies.

This digital repression not only diminishes global awareness of the Palestinian struggle for freedom but also undermines the ability of Palestinians to raise their voices about their cause. As advocacy groups like "Meta, Let Palestine Speak" and "Facebook, We Need to Talk" have pointed out, these policies interfere with justice, erasing evidence of Israeli human rights abuses and obstructing investigations into war crimes.

Such suppression often deprives the public of a comprehensive view of important issues, resulting in a fragmented and skewed conversation that lacks a range of opinions and raising serious concerns about the openness of content filtering. To ensure a fair representation of global concerns, social media platforms must adopt transparent algorithmic practices.

Clear and straightforward algorithmic processes are necessary to promote accountability and justice. Transparency would encourage pluralism by making marginalized opinions visible, allowing varied viewpoints to contribute to a more balanced public discourse. Furthermore, openness about algorithms enhances user trust: when users see platforms as fair spaces, healthier online communities can form. Users become active participants in discussions about global issues when they understand why certain content is prioritized and can make informed choices about what to engage with and share. Ultimately, transparency in algorithmic practices supports fair content moderation, enriches public discourse, and upholds social media’s role as a space for democratic dialogue and informed citizenry.

To create a fair digital space for Palestinian narratives and other war-related content, digital platforms and their content moderators must adopt practices and technologies that promote fair procedures and openness in decision-making. Civil society groups advocating for Palestinian rights, such as the American-Arab Anti-Discrimination Committee, have escalated these issues to Meta's Oversight Board, demanding accountability and transparency in moderation practices. Transparent algorithmic practices are therefore non-negotiable if we are to avoid bias and censorship of politically sensitive content. Social media platforms must disclose how they moderate Palestinian content so that organisations know how decisions about visibility are made, which narratives are suppressed, and why.

More generally, cross-regional analysis is needed to assess whether Palestinian narratives experience particular or heightened suppression compared with content about other conflicts, such as the war in Ukraine or the conflict in Sudan. Analyzing reach, engagement, and other data across geopolitical contexts can help platforms identify patterns and biases and mitigate the uneven visibility of Palestinian content.

Long-term monitoring of reach and engagement on Palestinian-related posts would provide a more accurate picture of whether visibility varies owing to algorithmic modifications or political sensitivity, particularly during major events like protests or conflict escalation. Regulators have an important role to play in this process by ensuring algorithmic transparency and accountability. Governments could create rules requiring platforms to explain their moderation practices, especially when Palestinian issues are involved. Routine algorithm audits, carried out as independent assessments of bias, would also be desirable.

Finally, safeguarding these efforts from political interference is crucial to maintaining the integrity of content moderation policies. By implementing these strategies, platforms like Meta can begin to repair the trust of marginalized communities and ensure that Palestinian voices are represented fairly in the global digital discourse. Only by balancing the scales of visibility can we ensure that digital spaces truly serve as platforms for inclusive and equitable dialogue.